Back in the 1960s, the U.S. started vaccinating kids for measles. As expected, children stopped getting measles.

But something else happened.

Childhood deaths from all infectious diseases plummeted. Even deaths from diseases like pneumonia and diarrhea were cut by half.

“So it’s really been a mystery — why do children stop dying at such high rates from all these different infections following introduction of the measles vaccine,” says Michael Mina, a postdoc in biology at Princeton University and a medical student at Emory University.

Scientists Crack A 50-Year-Old Mystery About The Measles Vaccine

Photo credit: Photofusion/UIG via Getty Images

It’s way too late for this, but it’s important to note that NASA didn’t discover the new earth-like planets. It was a group of astronomers led by a dude named Michaël Gillon from the University of Liège in Belgium. Giving NASA credit for this gives the United States credit for something they didn’t do, and we already have a problem with making things about ourselves, so just, like… be mindful. I’d be pissed if I discovered a small solar system and credit was wrongfully given to someone else.

A guy asks an engineer, “Hey, what’s 2 + 2?”

The engineer responds, “4. No wait, make it 5 just to be on the safe side.”

cyanobacterium: i have made Oxygen

chemotrophs: you fucked up a perfectly good planet is what you did. look at it. it’s all rusty

English-speaking parents tend to use vague, one-size-fits-all verbs as they emphasize nouns: cars, trucks, buses, bicycles and scooters all simply “go.” Mandarin speakers do the opposite: they use catchall nouns such as “vehicle” but describe action—driving, riding, sitting on, pushing—with very specific verbs. “As a native English speaker, my first instinct when a baby points is to label,” Tardif says. Her babysitter, on the other hand, was a native Mandarin speaker, whose instinct was to name the action she thought the child was trying to achieve.

via Twitter

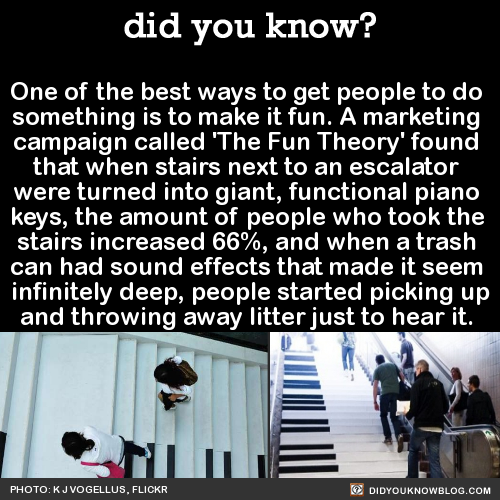

One of the best ways to get people to do something is to make it fun. A marketing campaign called ‘The Fun Theory’ found that when stairs next to an escalator were turned into giant, functional piano keys, the number of people who took the stairs increased by 66%, and when a trash can had sound effects that made it seem infinitely deep, people started picking up and throwing away litter just to hear it.

Paint colors designed by neural network, Part 2

So it turns out you can train a neural network to generate paint colors if you give it a list of 7,700 Sherwin-Williams paint colors as input. How a neural network basically works is it looks at a set of data - in this case, a long list of Sherwin-Williams paint color names and RGB (red, green, blue) numbers that represent the color - and it tries to form its own rules about how to generate more data like it.

Last time I reported results that were, well… mixed. The neural network produced colors, all right, but it hadn’t gotten the hang of producing appealing names to go with them - instead producing names like Rose Hork, Stanky Bean, and Turdly. It also had trouble matching names to colors, and would often produce an “Ice Gray” that was a mustard yellow, for example, or a “Ferry Purple” that was decidedly brown.

These were not great names.

There are lots of things that affect how well the algorithm does, however.

One simple change turns out to be the “temperature” (think: creativity) variable, which adjusts whether the neural network always picks the most likely next character as it’s generating text, or whether it will go with something farther down the list. I had the temperature originally set pretty high, but it turns out that when I turn it down ever so slightly, the algorithm does a lot better. Not only do the names better match the colors, but it begins to reproduce color gradients that must have been in the original dataset all along. Colors tend to be grouped together in these gradients, so it shifts gradually from greens to browns to blues to yellows, etc. and does eventually cover the rainbow, not just beige.
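For anyone who wants to see what the temperature knob does mechanically, here is a minimal sketch. It is not the char-rnn code itself; the function names and the example scores are made up for illustration, and treating a temperature of 0 as "always pick the top character" is a convention assumed here. The idea is that the network's raw scores for each candidate next character are divided by the temperature before being turned into probabilities, so a low temperature sharpens the distribution and a high one flattens it.

```python
import math
import random


def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max so exp() cannot overflow
    weights = [math.exp(s - peak) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]


def sample_next_char(scores, temperature, rng=random):
    """Pick the index of the next character (hypothetical helper)."""
    if temperature <= 0:
        # Assumed convention: zero temperature means "take the most likely one".
        return max(range(len(scores)), key=lambda i: scores[i])
    probs = softmax_with_temperature(scores, temperature)
    return rng.choices(range(len(scores)), weights=probs, k=1)[0]


# Made-up scores for three candidate characters.
scores = [2.0, 1.0, 0.1]
cautious = softmax_with_temperature(scores, 0.5)  # favors the top choice
creative = softmax_with_temperature(scores, 2.0)  # spreads the odds out
```

With the lower temperature the top-scoring character takes a much larger share of the probability, which matches the behavior described above: fewer wild picks from farther down the list.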

Apparently it was trying to give me better results, but I kept screwing it up.

Raw output from RGB neural net, now less-annoyed by my temperature setting

People also sent in suggestions on how to improve the algorithm. One of the most-frequent was to try a different way of representing color - it turns out that RGB (with a single color represented by the amount of Red, Green, and Blue in it) isn’t very well matched to the way human eyes perceive color.

These are some results from a different color representation, known as HSV. In HSV representation, a single color is represented by three numbers like in RGB, but this time they stand for Hue, Saturation, and Value. You can think of the Hue number as representing the color, Saturation as representing how intense (vs gray) the color is, and Value as representing the brightness. Other than the way of representing the color, everything else about the dataset and the neural network is the same. (char-rnn, 512 neurons and 2 layers, dropout 0.8, 50 epochs)
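As a rough illustration of what the conversion looks like, here is a small sketch using Python's standard `colorsys` module. The wrapper name `rgb255_to_hsv` is made up, and how the numbers were actually scaled or written out for the training data is not stated here, so treat the 0-to-1 output range as an assumption.

```python
import colorsys


def rgb255_to_hsv(r, g, b):
    """Convert 0-255 RGB values to (hue, saturation, value), each in 0..1."""
    return colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)


pure_red = rgb255_to_hsv(255, 0, 0)      # fully saturated, full brightness
mid_gray = rgb255_to_hsv(128, 128, 128)  # zero saturation: no "color" at all
```

A gray comes out with a saturation of zero, which is the "intense vs gray" axis described above.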

Raw output from HSV neural net:

And here are some results from a third color representation, known as LAB. In this color space, the first number stands for lightness, the second number stands for the amount of green vs red, and the third number stands for the amount of blue vs yellow.
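For reference, here is one way to get from RGB to LAB by hand. This is a sketch that assumes the inputs are standard sRGB values and uses the D65 white point; the function name is made up, and the tool actually used to convert the dataset is not specified here.

```python
def srgb_to_lab(r, g, b):
    """Convert 0-255 sRGB values to CIE L*a*b* (assuming a D65 white point)."""

    def to_linear(channel):
        # Undo the sRGB gamma curve.
        c = channel / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    rl, gl, bl = to_linear(r), to_linear(g), to_linear(b)

    # Linear RGB to CIE XYZ (standard sRGB matrix).
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl

    def f(t):
        delta = 6 / 29
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    # Normalize by the D65 reference white before applying f.
    fx, fy, fz = f(x / 0.95047), f(y / 1.0), f(z / 1.08883)

    lightness = 116 * fy - 16
    a = 500 * (fx - fy)  # negative = green, positive = red
    b_axis = 200 * (fy - fz)  # negative = blue, positive = yellow
    return lightness, a, b_axis


white = srgb_to_lab(255, 255, 255)
red = srgb_to_lab(255, 0, 0)
blue = srgb_to_lab(0, 0, 255)
```

White lands at a lightness of about 100 with both color axes near zero, pure red has a positive second number, and pure blue has a negative third number.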

Raw output from LAB neural net:

It turns out that the color representation doesn’t make a very big difference in how good the results are (at least as far as I can tell with my very simple experiment). Surprisingly, RGB seems best able to reproduce the gradients from the original dataset - maybe it’s more resistant to disruption when the temperature setting introduces randomness.

And the color names are pretty bad, no matter how the colors themselves are represented.

However, a blog reader compiled this dataset, which has paint colors from other companies such as Behr and Benjamin Moore, as well as a bunch of user-submitted colors from a big XKCD survey. He also changed all the names to lowercase, so the neural network wouldn’t have to learn two versions of each letter.
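To see why lowercasing helps a character-level model, here is a tiny sketch that counts the distinct characters the network would have to learn before and after the change. The helper name is made up, and the two sample names are just borrowed from earlier in this post for illustration; the real dataset is far larger.

```python
def character_vocabulary(names):
    """Return the set of distinct characters a character-level model would see."""
    return set("".join(names))


sample_names = ["Ice Gray", "Ferry Purple"]
lowercased = [name.lower() for name in sample_names]

vocab_before = character_vocabulary(sample_names)
vocab_after = character_vocabulary(lowercased)
```

In this sample "P" and "p" collapse into one symbol, so the lowercased vocabulary is smaller, and every example of a letter now teaches the network about the same symbol.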

And the results were… surprisingly good. Pretty much every name was a plausible match to its color (even if it wasn’t a plausible color you’d find in the paint store). The answer seems to be, as it often is for neural networks: more data.

Raw output using The Big RGB Dataset:

I leave you with the Hall of Fame:

RGB:

HSV:

LAB:

Big RGB dataset:

Now that we know the (proposed) names of the four new elements, here’s an updated graphic with more information on each! High-res image/PDF: http://wp.me/p4aPLT-1Eg


![Source [x]](https://64.media.tumblr.com/c67e16b83f88fe48da4656147229f385/tumblr_nz90mw4OiA1u1i4d4o1_500.jpg)

![Source [x]](https://64.media.tumblr.com/a60c74bcd55fb8555c574abb6eb4a0aa/tumblr_nz90mw4OiA1u1i4d4o2_500.jpg)

![Source [x]](https://64.media.tumblr.com/35e92a53a2e18de1283034cd6d7999ea/tumblr_nz90mw4OiA1u1i4d4o3_500.jpg)

![Source [x]](https://64.media.tumblr.com/4c1566aefd626f49bf3bfaf0c2e04616/tumblr_nz90mw4OiA1u1i4d4o4_500.jpg)

![Source [x]](https://64.media.tumblr.com/61c3673d595a1d1ddb2bd9c6d25862ae/tumblr_nz90mw4OiA1u1i4d4o5_500.jpg)

![Source [x]](https://64.media.tumblr.com/3830b9a2ede2b1021bb8596b1bc4bb6f/tumblr_nz90mw4OiA1u1i4d4o6_500.jpg)